The Model Context Protocol (MCP): The “USB-C” Standard for AI Connections


29/10/2025 19:15

How MCP could make connecting AI systems to tools and data as simple as plugging in a cable.

The Growing Problem of AI Integration

As AI systems get more capable, they also get more complicated. Every new app wants to connect to dozens of APIs, databases, and tools — and each connection comes with custom code, fragile configs, and maintenance pain. What if there were a universal way to make all those connections just work?

That’s the idea behind the Model Context Protocol (MCP). Some call it the “USB-C for AI,” and the analogy fits well: just as USB-C standardized how hardware devices connect, MCP aims to standardize how AI applications connect to external data sources, APIs, and services, replacing one-off custom integrations with a single, secure, discoverable protocol.

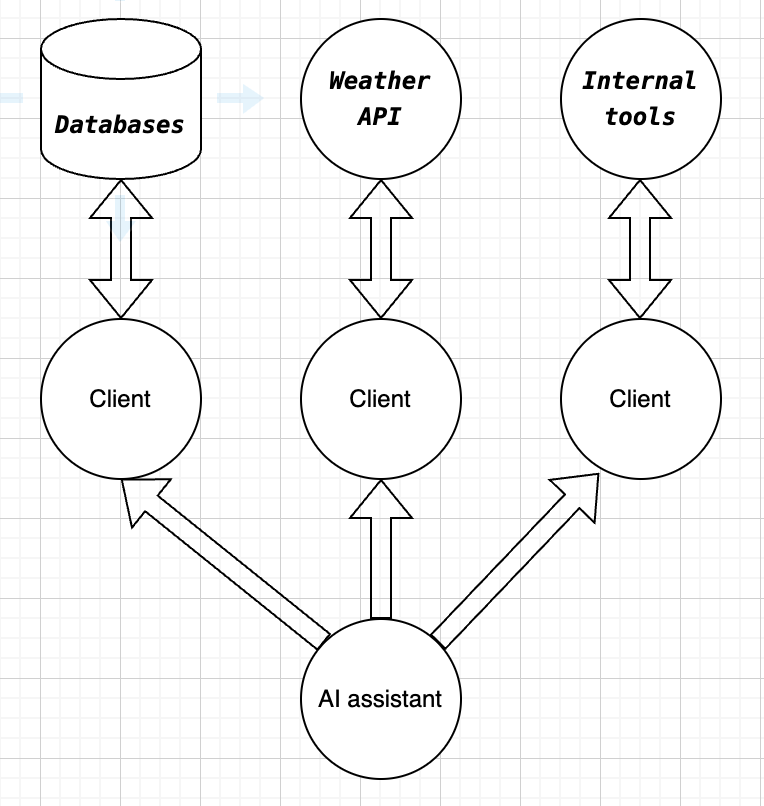

The Core Architecture: Host, Client, and Server

At its heart, MCP follows a modular client–host–server architecture.

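- The Host (like an AI-powered IDE, chatbot, or desktop assistant) is the application the user interacts with. It creates and manages clients, enforces security policies and user consent, and coordinates how the AI model uses what the servers provide.

- Each Client, created and managed by the host, maintains a one-to-one connection with a single server and handles the protocol communication with it. A host that talks to three servers runs three clients.

- Each Server is a focused program, running locally or as a remote service, that exposes specific tools, data (resources), or prompt templates.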

This design enforces clear security boundaries between components (by design, a server sees only what the host chooses to pass it, not the full conversation or other servers) and makes it easy to combine multiple services in a single AI-powered application.
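To make the division of labor concrete, here is a toy, in-process model of the three roles. It is not the official MCP SDK and does no real networking; the class names `ToyServer`, `ToyClient`, and `ToyHost`, and the example tools, are hypothetical. It only illustrates the shape of the design: each client is bound to exactly one server, and the host owns the clients and routes each request through the right one.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToyServer:
    """A focused program exposing a few named tools (toy model, not the MCP SDK)."""
    name: str
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def call_tool(self, tool: str, **kwargs: Any) -> Any:
        if tool not in self.tools:
            raise KeyError(f"{self.name} has no tool {tool!r}")
        return self.tools[tool](**kwargs)


class ToyClient:
    """Bound to exactly one server; it holds no reference to any other server."""

    def __init__(self, server: ToyServer) -> None:
        self._server = server

    def list_tools(self) -> List[str]:
        return sorted(self._server.tools)

    def call_tool(self, tool: str, **kwargs: Any) -> Any:
        return self._server.call_tool(tool, **kwargs)


class ToyHost:
    """Creates one client per server and routes each request through the right client."""

    def __init__(self) -> None:
        self.clients: Dict[str, ToyClient] = {}

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def call(self, server_name: str, tool: str, **kwargs: Any) -> Any:
        return self.clients[server_name].call_tool(tool, **kwargs)


# Usage: two independent (made-up) servers behind one host.
rows = {"users": 3}
weather = ToyServer("weather", {"forecast": lambda city: f"Sunny in {city}"})
database = ToyServer("database", {"count_rows": lambda table: rows.get(table, 0)})

host = ToyHost()
host.connect(weather)
host.connect(database)
print(host.call("weather", "forecast", city="Oslo"))       # Sunny in Oslo
print(host.call("database", "count_rows", table="users"))  # 3
```

Keeping the client-per-server mapping explicit is what lets the host reason about which server may see which request.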

Visualizing the Flow

Picture it like this: an AI assistant (the host) runs one client per server, so Client A → Server A (database), Client B → Server B (weather API), and Client C → Server C (internal analytics).

Each server describes its capabilities (its “menu” of available tools, resources, and prompts), and the client passes that description to the host. The host, and the AI model behind it, can then decide how to use those capabilities.

The best part? Because each server sits behind its own client connection, you can add or remove a server without rewriting the rest of your setup. That’s what makes it feel plug-and-play.
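The plug-and-play behavior can be sketched as a small host-side catalog that merges each server’s advertised menu into one list the model can see, and rebuilds it whenever a server is added or removed. This is a simplified, hypothetical illustration rather than part of the MCP specification or SDKs; in particular, the `server/tool` naming scheme is an assumption chosen here so that two servers exposing a tool with the same name don’t collide.

```python
from typing import Dict

# A "menu" maps tool name -> human-readable description (simplified; real MCP
# tool entries also carry an input schema).
Menu = Dict[str, str]


class ToolCatalog:
    """Host-side view of every tool offered by the currently connected servers."""

    def __init__(self) -> None:
        self._menus: Dict[str, Menu] = {}

    def add_server(self, server_name: str, menu: Menu) -> None:
        self._menus[server_name] = dict(menu)

    def remove_server(self, server_name: str) -> None:
        # Dropping one server leaves every other server's entries untouched.
        self._menus.pop(server_name, None)

    def merged(self) -> Menu:
        # Prefix tools with their server name so identically named tools don't clash.
        return {
            f"{server}/{tool}": description
            for server, menu in self._menus.items()
            for tool, description in menu.items()
        }


catalog = ToolCatalog()
catalog.add_server("db", {"query": "Run a read-only SQL query"})
catalog.add_server("weather", {"forecast": "Get a city forecast"})
catalog.add_server("analytics", {"query": "Query internal metrics"})
print(sorted(catalog.merged()))  # ['analytics/query', 'db/query', 'weather/forecast']

catalog.remove_server("weather")
print(sorted(catalog.merged()))  # ['analytics/query', 'db/query']
```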

The Power of Dynamic Discovery

Traditional API integrations are rigid: client code hard-codes endpoints and parameters, so any change on the server side risks breaking it. MCP takes a different approach.

When a client connects to a server, it doesn’t assume anything. Instead, it asks:

“What can you do?”

The server responds with a description of its capabilities: the tools it provides, the input parameters each tool expects (described as a JSON Schema), the resources and prompts it offers, and so on. If the server’s features change later, it can notify the client, which then re-fetches the list.
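Under the hood, MCP messages are JSON-RPC 2.0. Roughly, the discovery step goes like this: the client sends `initialize` with its own capabilities, the server answers with its capabilities, the client confirms with a `notifications/initialized` notification, and then it sends `tools/list`. The sketch below builds those messages as plain Python dictionaries and parses a sample `tools/list` reply. The tool `get_forecast`, its schema, and the client and server names are made up, and the protocol version string is assumed to be one published spec revision that may differ from what your SDK negotiates; check the specification for the exact fields.

```python
import json
from typing import Any, Dict, List, Optional

PROTOCOL_VERSION = "2025-06-18"  # assumed: one published spec revision


def make_request(msg_id: int, method: str,
                 params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request: it carries an id and expects a response."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": msg_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 notification: no id, no response expected."""
    return {"jsonrpc": "2.0", "method": method}


# 1. The client introduces itself; it assumes nothing about the server yet.
initialize = make_request(1, "initialize", {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},
})

# 2. An example server reply: it offers tools and will announce list changes.
initialize_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {"tools": {"listChanged": True}},
        "serverInfo": {"name": "example-weather-server", "version": "0.1.0"},
    },
}
supports_tools = "tools" in initialize_response["result"]["capabilities"]

# 3. The client confirms the handshake, then asks "What can you do?"
initialized = make_notification("notifications/initialized")
list_tools = make_request(2, "tools/list")

# 4. An example tools/list reply: each tool describes its inputs as JSON Schema.
tools_response = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "tools": [{
            "name": "get_forecast",
            "description": "Get the weather forecast for a city",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        }],
    },
}


def tool_names(response: Dict[str, Any]) -> List[str]:
    """Pull the advertised tool names out of a tools/list response."""
    return [tool["name"] for tool in response["result"]["tools"]]


for message in (initialize, initialized, list_tools):
    print(json.dumps(message))
if supports_tools:
    print(tool_names(tools_response))  # ['get_forecast']
```

In practice an SDK handles this exchange over a transport such as stdio or HTTP; the point here is only that the tool list comes from the server at runtime, not from the client’s source code.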

This runtime capability discovery makes MCP systems self-describing and more resilient to change: a server can add features without every client having to be rewritten, which suits the fast-moving AI world.
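On the client side, this resilience mostly comes down to not hard-coding the tool list. A client can cache whatever `tools/list` last returned and re-fetch when the server sends a `notifications/tools/list_changed` notification. In this hypothetical sketch, `fetch_tools` stands in for a real `tools/list` round trip and the tool names are invented; a real client would also need to handle pagination and errors.

```python
from typing import Any, Callable, Dict, List

ToolList = List[Dict[str, Any]]


class CachingToolClient:
    """Caches a server's tool list and refreshes it when the server says it changed."""

    def __init__(self, fetch_tools: Callable[[], ToolList]) -> None:
        self._fetch_tools = fetch_tools  # hypothetical stand-in for a tools/list request
        self.tools: ToolList = fetch_tools()

    def handle_notification(self, message: Dict[str, Any]) -> None:
        # Only this notification triggers re-discovery; others are ignored here.
        if message.get("method") == "notifications/tools/list_changed":
            self.tools = self._fetch_tools()

    def names(self) -> List[str]:
        return [tool["name"] for tool in self.tools]


# Simulated server state: a tool gets added after the client has connected.
server_tools: ToolList = [{"name": "get_forecast"}]
client = CachingToolClient(lambda: list(server_tools))
print(client.names())  # ['get_forecast']

server_tools.append({"name": "get_air_quality"})
client.handle_notification({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
print(client.names())  # ['get_forecast', 'get_air_quality']
```

The client code never names `get_air_quality`, yet picks it up as soon as the server announces the change.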

Why MCP Matters

MCP isn’t just a new API spec — it’s a shift in how we think about AI architecture. Instead of tightly coupled systems that constantly break when something changes, we get modular, composable AI components that can safely interact, grow, and evolve together.

As more AI frameworks and assistants adopt MCP, it could become the backbone of AI interoperability: the unseen connector that lets models, tools, and data work together. It’s still early days, but MCP may well become the standard for how AI talks to the world.

Further Reading & Next Steps

If you want to dig deeper into how MCP works and how to build your own servers, check out:

- 🔗 Visual Guide to Model Context Protocol (Daily Dose of Data Science): a great visual intro to MCP concepts.

- 💻 Model Context Protocol GitHub repositories (the modelcontextprotocol organization; MCP was originally introduced by Anthropic): the official spec, SDKs, and examples.

- 🧩 LM Studio + MCP Integration: how local LLM environments are adopting the protocol.

- 📘 Developer Docs for Building MCP Servers: a hands-on guide to building your own MCP server.

MCP is still evolving, but it’s already showing what the future of AI connectivity could look like: open, modular, and universal.